Ed’s Note: This is an extract from the forthcoming book C++ Concurrency in Action by Anthony Williams. For Source Code, Sample Chapters, the Author Forum and other resources, go to http://www.manning.com/williams/

There are six memory ordering options that can be applied to operations on atomic types: memory_order_relaxed, memory_order_consume, memory_order_acquire, memory_order_release, memory_order_acq_rel, and memory_order_seq_cst. Unless you specify otherwise for a particular operation, the memory-ordering option for all operations on atomic types is memory_order_seq_cst, which is the most stringent of the available options. Although there are six ordering options, they represent three models: sequentially-consistent ordering (memory_order_seq_cst), acquire-release ordering (memory_order_consume, memory_order_acquire, memory_order_release, and memory_order_acq_rel), and relaxed ordering (memory_order_relaxed).

These distinct memory-ordering models can have varying costs on different CPU architectures. For example, on systems based on architectures with fine control over the visibility of operations by processors other than the one that made the change, additional synchronization instructions can be required for sequentially consistent ordering over acquire-release ordering or relaxed ordering and for acquire-release ordering over relaxed ordering. If these systems have many processors, then these additional synchronization instructions may take a significant amount of time, thus reducing the overall performance of the system. On the other hand, CPUs that use the x86 or x86-64 architectures (such as the Intel and AMD processors common in desktop PCs) do not require any additional instructions for acquire-release ordering beyond those necessary for ensuring atomicity, and even sequentially consistent ordering doesn’t require any special treatment for load operations, although there’s a small additional cost on stores.

The availability of the distinct memory-ordering models allows experts to take advantage of the increased performance of the more fine-grained ordering relationships and use the default sequentially consistent ordering (which is considerably easier to reason about than the others) for those cases that are less critical.

In order to choose the ordering model or to understand the ordering relationships in code that uses the different models, it’s important to know how the choices affect the program behaviour. Let’s, therefore, look at the consequences of each choice for operation ordering and synchronizes-with.

Sequentially Consistent Ordering

The default ordering is named sequentially consistent because it implies that the behavior of the program is consistent with a simple sequential view of the world. If all operations on instances of atomic types are sequentially consistent, then the behavior of a multithreaded program is as if all these operations were performed in some particular sequence by a single thread. This is by far the easiest memory ordering to understand, which is why it’s the default: all threads must see the same order of operations. This makes it easy to reason about the behavior of code written with atomic variables. You can write down all the possible sequences of operations by different threads, eliminate those that are inconsistent, and verify that your code behaves as expected in the others. It also means that operations can’t be reordered; if your code has one operation before another in one thread, then that ordering must be seen by all other threads.

From the point of view of synchronization, a sequentially consistent store synchronizes-with a sequentially consistent load of the same variable that reads the value stored. This provides one ordering constraint on the operation of two (or more) threads, but sequential consistency is more powerful than that. Any sequentially consistent atomic operations done after that load must also appear after the store to other threads in the system using sequentially consistent atomic operations. The example in listing 1 demonstrates this ordering constraint in action. This constraint doesn’t carry forward to threads that use atomic operations with relaxed memory orderings; they can still see the operations in a different order, so you must use sequentially consistent operations on all your threads in order to get the benefit.

This ease of understanding can come at a price, though. On a weakly ordered machine with many processors, it can impose a noticeable performance penalty, because the overall sequence of operations must be kept consistent between the processors, possibly requiring extensive (and expensive!) synchronization operations between the processors. That said, some processor architectures (such as the common x86 and x86-64 architectures) offer sequential consistency relatively cheaply, so if you’re concerned about the performance implications of using sequentially consistent ordering, check the documentation for your target processor architectures.

The following listing shows sequential consistency in action. The loads and stores to x and y are explicitly tagged with memory_order_seq_cst, although this tag could be omitted in this case because it’s the default.

Listing 1: Sequential Consistency Implies A Total Ordering

#include <atomic>

#include <thread>

#include <assert.h>

std::atomic<bool> x,y;

std::atomic<int> z;

void write_x()

{

x.store(true,std::memory_order_seq_cst); #1

}

void write_y()

{

y.store(true,std::memory_order_seq_cst); #2

}

void read_x_then_y()

{

while(!x.load(std::memory_order_seq_cst));

if(y.load(std::memory_order_seq_cst)) #3

++z;

}

void read_y_then_x()

{

while(!y.load(std::memory_order_seq_cst));

if(x.load(std::memory_order_seq_cst)) #4

++z;

}

int main()

{

x=false;

y=false;

z=0;

std::thread a(write_x);

std::thread b(write_y);

std::thread c(read_x_then_y);

std::thread d(read_y_then_x);

a.join();

b.join();

c.join();

d.join();

assert(z.load()!=0); #5

}

The assert (#5) can never fire because either the store to x (#1) or the store to y (#2) must happen first, even though it’s not specified which. If the load of y in read_x_then_y (#3) returns false, then the store to x must occur before the store to y, in which case the load of x in read_y_then_x (#4) must return true, because the while loop ensures that the y is true at this point. Because the semantics of memory_order_seq_cst require a single total ordering over all operations tagged memory_order_seq_cst, there’s an implied ordering relationship between a load of y that returns false (#3) and the store to y (#1). In a single total order, if one thread sees x==true and then subsequently sees y==false, this implies that the store to x occurs before the store to y in this total order.

Of course, because everything is symmetrical, it could also happen the other way around, with the load of x (#4) returning false, forcing the load of y (#3) to return true. In both cases, z is equal to 1. Both loads can return true, leading to z being 2, but under no circumstances can z be zero.

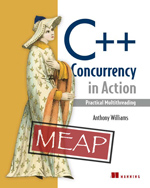

The operations and happens-before relationships are shown in figure 1. The dashed line shows the implied-ordering relationship required in order to maintain sequential consistency.

Figure 1 Sequential consistency and happens-before

Sequential consistency is the most straightforward and intuitive ordering, but it’s also the most expensive memory ordering because it requires global synchronization between all threads. On a multiprocessor system, this may require quite extensive and time-consuming communication between processors.

In order to avoid this synchronization cost, we need to step outside the world of sequential consistency and consider using other memory orderings.

Non-sequentially-consistent Memory Orderings

Once you step outside the nice sequentially consistent world, things start to get complicated. Probably the single biggest issue to come to grips with is the fact that there’s no longer a single global order of events. This means that different threads can see different views of the same operation, and any mental model you have of operations from different threads neatly interleaved one after the other must be thrown away. Not only do you have to account for things happening truly concurrently, but threads don’t have to agree on the order of events. In order to write (or even just to understand) any code that uses a memory ordering other than the default memory_order_seq_cst, it’s absolutely vital to get your head around this. It’s not just that the compiler can reorder the instructions. Even if the threads are running the same bit of code, they can disagree on the order of events because of operations in other threads in the absence of explicit ordering constraints, because the different CPU caches and internal buffers can hold different values for the same memory. It’s so important I’ll say it again: threads don’t have to agree on the order of events.

Not only do you have to throw out mental models based on interleaving operations, you also have to throw out mental models based on the idea of the compiler or processor reordering the instructions. In the absence of other ordering constraints, the only requirement is that all threads agree on the modification order of each individual variable. Operations on distinct variables can appear in different orders on different threads, provided the values seen are consistent with any additional ordering constraints imposed.

This is best demonstrated by stepping completely outside the sequentially consistent world and using memory_order_relaxed for all operations. Once you’ve come to grips with that, you can move back to acquire-release ordering, which allows you to selectively introduce ordering relationships between operations and claw back some of your sanity.

Relaxed Ordering

Operations on atomic types performed with relaxed ordering don’t participate in synchronizes-with relationships. Operations on the same variable within a single thread still obey happens-before relationships, but there’s almost no requirement on ordering relative to other threads. The only requirement is that accesses to a single atomic variable from the same thread can’t be reordered: once a given thread has seen a particular value of an atomic variable, a subsequent read by that thread can’t retrieve an earlier value of the variable. Without any additional synchronization, the modification order of each variable is the only thing shared between threads that are using memory_order_relaxed.

To demonstrate quite how relaxed your relaxed operations can be, you need only two threads, as shown in the following listing.

Listing 2: Relaxed Operations Have Very Few Ordering Requirements

#include <atomic>

#include <thread>

#include <assert.h>

std::atomic<bool> x,y;

std::atomic<int> z;

void write_x_then_y()

{

x.store(true,std::memory_order_relaxed); #1

y.store(true,std::memory_order_relaxed); #2

}

void read_y_then_x()

{

while(!y.load(std::memory_order_relaxed)); #3

if(x.load(std::memory_order_relaxed)) #4

++z;

}

int main()

{

x=false;

y=false;

z=0;

std::thread a(write_x_then_y);

std::thread b(read_y_then_x);

a.join();

b.join();

assert(z.load()!=0); #5

}

This time the assert (#5) can fire, because the load of x (#4) can read false, even though the load of y (#3) reads true and the store of x (#1) happens-before the store of y (#2). x and y are different variables, so there are no ordering guarantees relating to the visibility of values arising from operations on each.

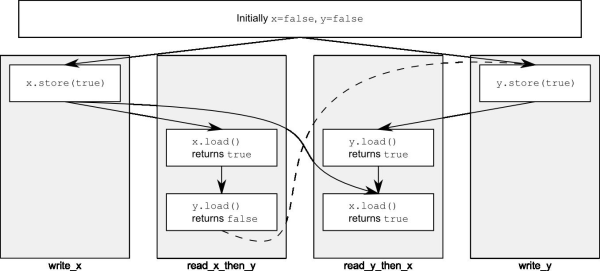

Relaxed operations on different variables can be freely reordered provided they obey any happens-before relationships they’re bound by (for example, within the same thread). They don’t introduce synchronizes-with relationships. The happens-before relationships from listing 2 are shown in figure 2, along with a possible outcome. Even though there’s a happens-before relationship between the stores and between the loads, there isn’t one between a store and a load, and so the loads can see the stores out of order.

Figure 2: Relaxed Atomics And Happens-before

Let’s look at a slightly more complex example with three variables and five threads in the next listing.

Listing 3: Relaxed Operations on Multiple Threads

#include <thread>

#include <atomic>

#include <iostream>

std::atomic<int> x(0),y(0),z(0); #1

std::atomic<bool> go(false); #2

unsigned const loop_count=10;

struct read_values

{

int x,y,z;

};

read_values values1[loop_count];

read_values values2[loop_count];

read_values values3[loop_count];

read_values values4[loop_count];

read_values values5[loop_count];

void increment(std::atomic<int>* var_to_inc,read_values* values)

{

while(!go) #3

std::this_thread::yield();

for(unsigned i=0;i<loop_count;++i)

{

values[i].x=x.load(std::memory_order_relaxed);

values[i].y=y.load(std::memory_order_relaxed);

values[i].z=z.load(std::memory_order_relaxed);

var_to_inc->store(i+1,std::memory_order_relaxed); #4

std::this_thread::yield();

}

}

void read_vals(read_values* values)

{

while(!go) #5

std::this_thread::yield();

for(unsigned i=0;i<loop_count;++i)

{

values[i].x=x.load(std::memory_order_relaxed);

values[i].y=y.load(std::memory_order_relaxed);

values[i].z=z.load(std::memory_order_relaxed);

std::this_thread::yield();

}

}

void print(read_values* v)

{

for(unsigned i=0;i<loop_count;++i)

{

if(i)

std::cout<<",";

std::cout<<"("<<v[i].x<<","<<v[i].y<<","<<v[i].z<<")";

}

std::cout<<std::endl;

}

int main()

{

std::thread t1(increment,&x,values1);

std::thread t2(increment,&y,values2);

std::thread t3(increment,&z,values3);

std::thread t4(read_vals,values4);

std::thread t5(read_vals,values5);

go=true; #6

t5.join();

t4.join();

t3.join();

t2.join();

t1.join();

print(values1); #7

print(values2);

print(values3);

print(values4);

print(values5);

}

#3 Spin, waiting for the signal

#5 Spin, waiting for the signal

#6 Signals to start execution of main loop

#7 Prints the final values

This is a really simple program in essence. You have three shared global atomic variables (#1) and five threads. Each thread loops 10 times, reading the values of the three atomic variables using memory_order_relaxed and storing them in an array. Three of the threads each update one of the atomic variables each time through the loop (#4), while the other two threads just read. Once all the threads have been joined, you print the values from the arrays stored by each thread (#7).

The atomic variable go (#2) is used to ensure that the threads all start the loop as near as possible. Launching a thread is an expensive operation, and without an explicit delay, the first thread may be finished before the last one has started. Each thread waits for go to become true before entering the main loop (#3, #5), and go is set to true only once all the threads have started (#6).

One possible output from this program is as follows:

(0,0,0),(1,0,0),(2,0,0),(3,0,0),(4,0,0),(5,7,0),(6,7,8),(7,9,8),(8,9,8),(9,9,10) (0,0,0),(0,1,0),(0,2,0),(1,3,5),(8,4,5),(8,5,5),(8,6,6),(8,7,9),(10,8,9),(10,9,10) (0,0,0),(0,0,1),(0,0,2),(0,0,3),(0,0,4),(0,0,5),(0,0,6),(0,0,7),(0,0,8),(0,0,9) (1,3,0),(2,3,0),(2,4,1),(3,6,4),(3,9,5),(5,10,6),(5,10,8),(5,10,10),(9,10,10),(10,10,10) (0,0,0),(0,0,0),(0,0,0),(6,3,7),(6,5,7),(7,7,7),(7,8,7),(8,8,7),(8,8,9),(8,8,9)

The first three lines are the threads doing the updating, and the last two are the threads doing just reading. Each triplet is a set of variables x, y and z in that order from one pass through the loop. There are a few things to notice from this output:

- The first set of values shows x increasing by one with each triplet, the second set has y increasing by one, and the third has z increasing by one.

- The x elements of each triplet only increase within a given set, as do the y and z elements, but the increments are uneven, and the relative orderings vary between all threads.

- Thread 3 doesn’t see any of the updates to x or y; it sees only the updates it makes to z. This, however, doesn’t stop the other threads from seeing the updates to z mixed in with the updates to x and y.

This is a valid outcome for relaxed operations, but it’s not the only valid outcome. Any set of values that’s consistent with the three variables each holding the values 0 to 10 in turn and that has the thread incrementing a given variable printing the values 0 to 9 for that variable is valid.

Understanding Relaxed Ordering

To understand how this works, imagine that each variable is a man in a cubicle with a notepad. On his notepad is a list of values. You can phone him and ask him to give you a value, or you can tell him to write down a new value. If you tell him to write down a new value, then he writes it at the bottom of the list. If you ask him for a value, then he reads you a number from the list.

The first time you talk to this man, if you ask him for a value, he may give you any value from the list he has on his pad at the time. If you then ask him for another value, he may give you the same one again or a value further down the list. He’ll never give you a value further up the list. If you tell him to write down a number and then subsequently ask him for a value, he’ll give you either the number you told him to write down or a number below that on the list.

Imagine for a moment that his list starts with values 5, 10, 23, 3, 1, and 2. If you ask for a value, you could get any of those. If he gives you 10, then the next time you ask, he could give you 10 again, or any of the later ones, but not 5. If you call him five times, he could say “10, 10, 1, 2, 2,” for example. If you tell him to write down 42, then he’ll add it to the end of the list. If you ask him for a number again, he’ll keep telling you “42” until he has another number on his list and he feels like telling it to you.

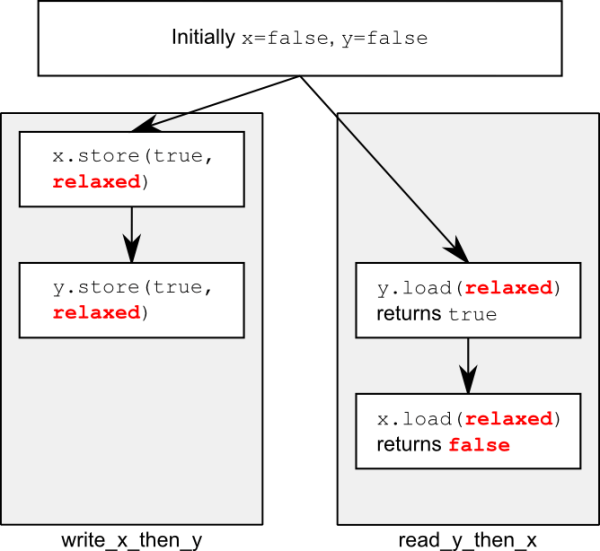

Now, imagine your friend Carl also has this man’s number. Carl can also phone him and either ask him to write down a number or ask for one, and he applies the same rules to Carl as he does to you. He has only one phone, so he can only deal with one of you at a time, so the list on his pad is a nice straightforward list. However, just because you got him to write down a new number doesn’t mean he has to tell it to Carl, and vice versa. If Carl asked him for a number and was told “23,” then just because you asked the man to write down 42, it doesn’t mean he’ll tell that to Carl next time. He may tell Carl any of the numbers 23, 3, 1, 2, 42, or even the 67 that Fred told him to write down after you called. He could very well tell Carl “23, 3, 3, 1, 67” without being inconsistent with what he told you. It’s like he keeps track of which number he told to whom with a little movable sticky note for each person, as in figure 3.

Figure 3: The Notebook of the Man in the Cubicle

Now imagine that there’s not just one man in a cubicle but a whole cubicle farm, with loads of men with phones and notepads. These are all our atomic variables. Each variable has its own modification order (the list of values on the pad), but there’s no relationship between them at all. If each caller (you, Carl, Anne, Dave, and Fred) is a thread, then this is what you get when every operation uses memory_order_relaxed. There are a few additional things you can tell the man in the cubicle, such as “write down this number, and tell me what was at the bottom of the list” (exchange) and “write down this number if the number on the bottom of the list is that; otherwise tell me what I should have guessed” (compare_exchange_strong), but that doesn’t affect the general principle.

If you think about the program logic from listing 2, then write_x_then_y is like some guy calling up the man in cubicle x and telling him to write true and then calling up the man in cubicle y and telling him to write true. The thread running read_y_then_x repeatedly calls up the man in cubicle y asking for a value until he says true and then calls the man in cubicle x to ask for a value. The man in cubicle x is under no obligation to tell you any specific value off his list and is quite within his rights to say false.

This makes relaxed atomic operations difficult to deal with. They must be used in combination with atomic operations that feature stronger ordering semantics in order to be useful for inter-thread synchronization. I strongly recommend avoiding relaxed atomic operations unless they’re absolutely necessary and even then using them only with extreme caution. Given the unintuitive results that can be achieved with just two threads and two variables in listing 2, it’s not hard to imagine the possible complexity when more threads and more variables are involved.

One way to achieve additional synchronization without the overhead of full-blown sequential consistency is to use acquire-release ordering.

Acquire-release ordering

Acquire-release ordering is a step up from relaxed ordering: there’s still no total order of operations, but it does introduce some synchronization. Under this ordering model, atomic loads are acquire operations (memory_order_acquire), atomic stores are release operations (memory_order_release), and atomic read-modify-write operations (such as fetch_add() or exchange()) are either acquire, release, or both (memory_order_acq_rel). Synchronization is pairwise, between the thread that does the release and the thread that does the acquire. A release operation synchronizes-with an acquire operation that reads the value written. This means that different threads can still see different orderings, but these orderings are restricted. The following listing is a rework of listing 1 using acquire-release semantics rather than sequentially consistent ones.

Listing 4: Acquire-release Doesn’t Imply A Total Ordering

#include <atomic>

#include <thread>

#include <assert.h>

std::atomic<bool> x,y;

std::atomic<int> z;

void write_x()

{

x.store(true,std::memory_order_release);

}

void write_y()

{

y.store(true,std::memory_order_release);

}

void read_x_then_y()

{

while(!x.load(std::memory_order_acquire));

if(y.load(std::memory_order_acquire)) #1

++z;

}

void read_y_then_x()

{

while(!y.load(std::memory_order_acquire));

if(x.load(std::memory_order_acquire)) #2

++z;

}

int main()

{

x=false;

y=false;

z=0;

std::thread a(write_x);

std::thread b(write_y);

std::thread c(read_x_then_y);

std::thread d(read_y_then_x);

a.join();

b.join();

c.join();

d.join();

assert(z.load()!=0); #3

}

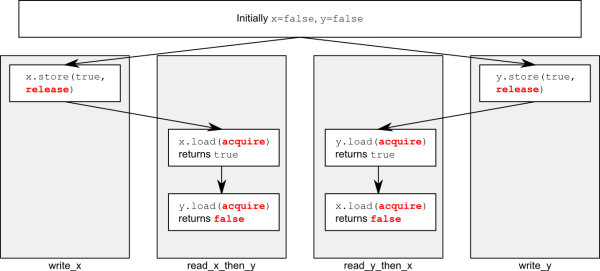

In this case, the assert (#3) can fire (just like in the relaxed-ordering case) because it’s possible for both the load of x (#2) and the load of y (#1) to read false. x and y are written by different threads, so the ordering from the release to the acquire in each case has no effect on the operations in the other threads.

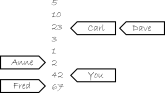

Figure 4 shows the happens-before relationships from listing 4, along with a possible outcome where the two reading threads each have a different view of the world. This is possible because there’s no happens-before relationship to force an ordering, as described previously.

Figure 4: Acquire-release And Happens-before

In order to see the benefit of acquire-release ordering, you need to consider two stores from the same thread, as in listing 2. If you change the store to y to use memory_order_release and the load from y to use memory_order_acquire as in the following listing, then you actually impose an ordering on the operations on x.

Listing 5: Acquire-release Operations can Impose Ordering on Relaxed Operations

#include <atomic>

#include <thread>

#include <assert.h>

std::atomic<bool> x,y;

std::atomic<int> z;

void write_x_then_y()

{

x.store(true,std::memory_order_relaxed); #1

y.store(true,std::memory_order_release); #2

}

void read_y_then_x()

{

while(!y.load(std::memory_order_acquire)); #3

if(x.load(std::memory_order_relaxed)) #4

++z;

}

int main()

{

x=false;

y=false;

z=0;

std::thread a(write_x_then_y);

std::thread b(read_y_then_x);

a.join();

b.join();

assert(z.load()!=0); #5

}

#1 Spin, waiting for y to be set to true

Eventually, the load from y (#3) will see true as written by the store (#2). Because the store uses memory_order_release and the load uses memory_order_acquire, the store synchronizes-with the load. The store to x (#1) happens-before the store to y (#2), because they’re in the same thread. Because the store to y synchronizes-with the load from y, the store to x also happens-before the load from y and by extension happens-before the load from x (#4). Thus the load from x must read true, and the assert (#5) can’t fire. If the load from y weren’t in a while loop, this wouldn’t necessarily be the case; the load from y might read false, in which case there’d be no requirement on the value read from x. In order to provide any synchronization, acquire and release operations must be paired up. The value stored by a release operation must be seen by an acquire operation for either to have any effect. If either the store at (#2) or the load at (#3) were a relaxed operation, there’d be no ordering on the accesses to x, so there’d be no guarantee that the load at (#4) would read true, and the assert could fire.

You can still think about acquire-release ordering in terms of our men with notepads in their cubicles, but you have to add more to the model. First, imagine that every store that’s done is part of some batch of updates, so when you call a man to tell him to write down a number, you also tell him which batch this update is part of: “Please write down 99, as part of batch 423.” For the last store in a batch, you tell this to the man too: “Please write down 147, which is the last store in batch 423.” The man in the cubicle will then duly write down this information, along with who gave him the value. This models a store-release operation. The next time you tell someone to write down a value, you increase the batch number: “Please write down 41, as part of batch 424.”

When you ask for a value, you now have a choice: you can either just ask for a value (which is a relaxed load), in which case the man just gives you the number, or you can ask for a value and information about whether it’s the last in a batch (which models a load-acquire). If you ask for the batch information, and the value wasn’t the last in a batch, then the man will tell you something like, “The number is 987, which is just a ‘normal’ value,” whereas if it were the last in a batch, then he’d tell you something like “The number is 987, which is the last number in batch 956 from Anne.” Now, here’s where the acquire-release semantics kick in: if you tell the man all the batches you know about when you ask for a value, then he’ll look down his list for the last value from any of the batches you know about and either give you that number or one further down the list.

How does this model acquire-release semantics? Let’s look at our example and see. First off, thread a is running write_x_then_y and says to the man in cubicle x, “Please write true as part of batch 1 from thread a,” which he duly writes down. Thread a then says to the man in cubicle y, “Please write true as the last write of batch 1 from thread a,” which he duly writes down. In the meantime, thread b is running read_y_then_x. Thread b keeps asking the man in box y for a value with batch information until he says “true.” He may have to ask many times, but eventually the man will say “true.” The man in box y doesn’t just say “true” though; he also says, “This is the last write in batch 1 from thread a.”

Now, thread b goes on to ask the man in box x for a value, but this time he says, “Please can I have a value, and by the way I know about batch 1 from thread a.” So now, the man from cubicle x has to look down his list for the last mention of batch 1 from thread a. The only mention he has is the value true, which is also the last value on his list, so he must read out that value; otherwise, he’s breaking the rules of the game.

One of the important properties of inter-thread happens-before is that it’s transitive: if A inter-thread happens-before B and B inter-thread happens-before C, then A inter-thread happens-before C. This means that acquire-release ordering can be used to synchronize data across several threads, even when the “intermediate” threads haven’t actually touched the data.

Transitive Synchronization with Acquire-release Ordering

In order to think about transitive ordering, you need at least three threads. The first thread modifies some shared variables and does a store-release to one of them. A second thread then reads the variable subject to the store-release with a load-acquire and performs a store-release on a second shared variable. Finally, a third thread does a load-acquire on that second shared variable. Provided that the load-acquire operations see the values written by the store-release operations to ensure the synchronizes-with relationships, this third thread can read the values of the other variables stored by the first thread, even if the intermediate thread didn’t touch any of them. This scenario is shown in the next listing.

Listing 6: Transitive Synchronization Using Acquire and Release Ordering

std::atomic<int> data[5];

std::atomic<bool> sync1(false),sync2(false);

void thread_1()

{

data[0].store(42,std::memory_order_relaxed);

data[1].store(97,std::memory_order_relaxed);

data[2].store(17,std::memory_order_relaxed);

data[3].store(-141,std::memory_order_relaxed);

data[4].store(2003,std::memory_order_relaxed);

sync1.store(true,std::memory_order_release); #1

}

void thread_2()

{

while(!sync1.load(std::memory_order_acquire)); #2

sync2.store(true,std::memory_order_release); #3

}

void thread_3()

{

while(!sync2.load(std::memory_order_acquire)); #4

assert(data[0].load(std::memory_order_relaxed)==42);

assert(data[1].load(std::memory_order_relaxed)==97);

assert(data[2].load(std::memory_order_relaxed)==17);

assert(data[3].load(std::memory_order_relaxed)==-141);

assert(data[4].load(std::memory_order_relaxed)==2003);

}

#1 Set sync1

#2 Loop until sync1 is set

#3 Set sync2

#4 Loop until sync2 is set

Even though thread_2 only touches the variables sync1 (#2) and sync2 (#3), this is enough for synchronization between thread_1 and thread_3 to ensure that the asserts don’t fire. First off, the stores to data from thread_1 happen-before the store to sync1 (#1), because they’re sequenced-before it in the same thread. Because the load from sync1 (#1) is in a while loop, it will eventually see the value stored from thread_1 and thus form the second half of the release-acquire pair. Therefore, the store to sync1 happens-before the final load from sync1 in the while loop. This load is sequenced before (and thus happens-before) the store to sync2 (#3), which forms a release-acquire pair with the final load from the while loop in thread_3 (#4). The store to sync2 (#3) thus happens-before the load (#4), which happens-before the loads from data. Because of the transitive nature of happens-before, we can chain it all together: the stores to data happen-before the store to sync1 (#1), which happens-before the load from sync1 (#2), which happens-before the store to sync2 (#3), which happens-before the load from sync2 (#4), which happens-before the loads from data. Thus the stores to data in thread_1 happen-before the loads from data in thread_3, and the asserts can’t fire.

In this case, we could combine sync1 and sync2 into a single variable by using a read-modify-write operation with memory_order_acq_rel in thread_2. One option would be to use compare_exchange_strong() to ensure that the value is updated only once the store from thread_1 has been seen:

std::atomic<int> sync(0);

void thread_1()

{

// ...

sync.store(1,std::memory_order_release);

}

void thread_2()

{

int expected=1;

while(!sync.compare_exchange_strong(expected,2,

std::memory_order_acq_rel))

expected=1;

}

void thread_3()

{

while(sync.load(std::memory_order_acquire)<2);

// ...

}

If you use read-modify-write operations, it’s important to pick the semantics you desire. In this case, you want both acquire and release semantics, so memory_order_acq_rel is appropriate, but you can use other orderings too. A fetch_sub operation with memory_order_acquire semantics doesn’t synchronize-with anything, even though it stores a value, because it isn’t a release operation. Likewise, a store can’t synchronize-with a fetch_or with memory_order_release semantics because the read part of the fetch_or isn’t an acquire operation. Read-modify-write operations with memory_order_acq_rel semantics behave as both an acquire and a release, so a prior store can synchronize-with such an operation, and it can synchronize-with a subsequent load, as is the case in this example.

If you mix acquire-release operations with sequentially consistent operations, the sequentially consistent loads behave like loads with acquire semantics, and sequentially consistent stores behave like stores with release semantics. Sequentially consistent read-modify-write operations behave as both acquire and release operations. Relaxed operations are still relaxed but are bound by the additional synchronizes-with and consequent happens-before relationships introduced through the use of acquire-release semantics.

Despite the potentially non-intuitive outcomes, anyone who’s used locks has had to deal with the same ordering issues: locking a mutex is an acquire operation, and unlocking the mutex is a release operation. With mutexes, you learn that you must ensure that the same mutex is locked when you read a value as it was locked when you wrote it, and the same applies here; your acquire and release operations have to be on the same variable to ensure an ordering. If data is protected with a mutex, then the exclusive nature of the lock means that the result is indistinguishable from that had the lock and unlock been sequentially consistent operations. Similarly, if you use acquire and release orderings on atomic variables to build a simple lock, then from the point of view of code that uses the lock, the behaviour will appear sequentially consistent, even though the internal operations are not.

If you don’t need the stringency of sequentially consistent ordering for your atomic operations, the pair-wise synchronization of acquire-release ordering has the potential for a much lower synchronization cost than the global ordering required for sequentially consistent operations. The trade-off here is the mental cost required to ensure that the ordering works correctly and that the non-intuitive behaviour across threads isn’t problematic.

Data Dependency with Acquire-release Ordering and memory_order_consume

In the introduction, I said that memory_order_consume was part of the acquire-release ordering model, but it was conspicuously absent from the preceding description. This is because memory_order_consume is special: it’s all about data dependencies, and it introduces the data-dependency nuances to the inter-thread happens-before relationship.

There are two new relations that deal with data dependencies: dependency-ordered-before and carries-a-dependency-to. Just like sequenced-before, carries-a-dependency-to applies strictly within a single thread and essentially models the data dependency between operations: if the result of an operation A is used as an operand for an operation B, then A carries-a-dependency-to B. If the result of operation A is a value of a scalar type such as an int, then the relationship still applies if the result of A is stored in a variable, and that variable is then used as an operand for operation B. This operation is also transitive, so if A carries-a-dependency-to B, and B carries-a-dependency-to C, then A carries-a-dependency-to C.

On the other hand, the dependency-ordered-before relationship can apply between threads. It’s introduced by using atomic load operations tagged with memory_order_consume. This is a special case of memory_order_acquire that limits the synchronized data to direct dependencies: a store operation A tagged with memory_order_release, memory_order_acq_rel, or memory_order_seq_cst is dependency-ordered-before a load operation B tagged with memory_order_consume if the consume reads the value stored. This is as opposed to the synchronizes-with relationship you get if the load uses memory_order_acquire. If this operation B then carries-a-dependency-to some operation C, then A is also dependency ordered before C.

This wouldn’t actually do us any good for synchronization purposes if it didn’t affect the inter-thread happens-before relation, but it does: if A is dependency ordered before B, then A also inter-thread happens-before B.

One important use for this kind of memory ordering is where the atomic operation loads a pointer to some data. By using memory_order_consume on the load and memory_order_release on the prior store, you ensure that the pointed-to data is correctly synchronized, without imposing any synchronization requirements on any other nondependent data. The following listing shows an example of this scenario.

Listing 7 Using std::memory_order_consume To Synchronize Data

struct X

{

int i;

std::string s;

};

std::atomic<X*> p;

std::atomic<int> a;

void create_x()

{

X* x=new X;

x->i=42;

x->s=”hello”;

a.store(99,std::memory_order_relaxed); #1

p.store(x,std::memory_order_release); #2

}

void use_x()

{

X* x;

while(!(x=p.load(std::memory_order_consume))) #3

std::this_thread::sleep(std::chrono::microseconds(1));

assert(x->i==42); #4

assert(x->s==”hello”); #5

assert(a.load(std::memory_order_relaxed)==99); #6

}

int main()

{

std::thread t1(create_x);

std::thread t2(use_x);

t1.join();

t2.join();

}

Even though the store to a (#1) is sequenced before the store to p (#2), and the store to p is tagged memory_order_release, the load of p (#3) is tagged memory_order_consume. This means that the store to p only happens-before those expressions that are dependent on the value loaded from p. This means that the asserts on the data members of the X structure (#4, #5) are guaranteed not to fire, because the load of p carries a dependency to those expressions through the variable x. On the other hand, the assert on the value of a (#6) may or may not fire: this operation isn’t dependent on the value loaded from p, and so there’s no guarantee on the value that’s read. This is particularly apparent because it’s tagged with memory_order_relaxed, as you’ll see.

Sometimes, you don’t want the overhead of carrying the dependency around. You want the compiler to be able to cache values in registers and reorder operations to optimize the code rather than fussing about the dependencies. In these scenarios, you can use std::kill_dependency() to explicitly break the dependency chain. std::kill_dependency() is a simple function template that copies the supplied argument to the return value but breaks the dependency chain in doing so. For example, if you have a global read-only array, and you use std::memory_order_consume when retrieving an index into that array from another thread, then you can use std::kill_dependency() to let the compiler know that it doesn’t need to reread the contents of the array entry, as in the following example:

int global_data[]={ … };

std::atomic<int> index;

void f()

{

int i=index.load(std::memory_order_consume);

do_something_with(global_data[std::kill_dependency(i)]);

}

Of course, you wouldn’t normally use std::memory_order_consume at all in such a simple scenario, but you might call on std::kill_dependency() in a similar situation with more complex code. You must remember that this is an optimization, so it should only be used with care and where profiling has demonstrated the need.

Summary

We’ve covered the C++0x memory model and the atomic operations that provide the basis for synchronization between threads. Specifically, we discussed the complex details of the various memory-ordering options.

Comments